Creating an Ubuntu Bedrock Minecraft image with Docker

This article is written to be less of a walk through and more of an account of the practical project as a side-by-side with the Kubernetes Mini-Series. This project will continue as I learn more about Docker, Kubernetes, AKS, and Azure DevOps. It'll bring all the pieces together in a real-world type of way with some really cool expected results. Ultimately the goal is to host a Minecraft server in AKS in a highly-available way. To start with I'll be creating a new image of Ubuntu Server with the Bedrock version of Minecraft Server.

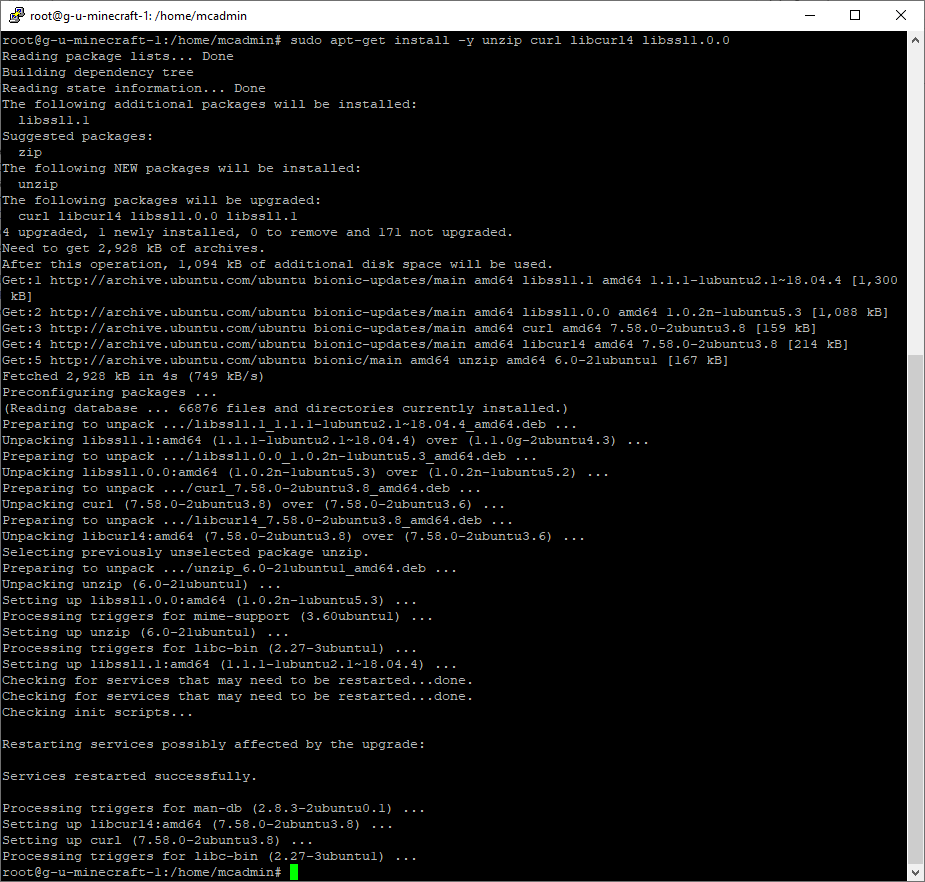

When I started this project, I really was just trying to find a way to make the learning process more fun and relatable. I wanted to stand up an application that wasn't just WordPress with MySQL: something I could keep enjoying well after I got a handle on the basics.

Here's what we'll be covering in this article:

- Building the Bedrock server on Ubuntu for testing

- Building the image with a dockerfile

- Tagging and pushing the image to docker repo

- Running the server in docker to test for functionality

- Bonus: Creating a build pipeline in Azure DevOps to continuously check for and build the newest version of Bedrock Minecraft server on the newest version of Ubuntu, and push it to the repo.

There's a lot to unpack here, and I'll share everything I learned while doing all of these things. I won't be deep-diving on the Azure DevOps sections, just outlining how I set up my pipeline. In the future I would like to write an article on how to do a few different things within AzDO, so keep checking back if that's your interest.

I started by working out a way to build a Minecraft server I could connect to from devices that support the Bedrock version of Minecraft, which I have on PS4, Switch, and my Windows desktop. This way I could play on my server from whichever platform I happened to be on at the time. The first thing I did was search Google for Docker images of the Bedrock version of Minecraft server. As it turns out, nobody had made one. So the process really started with building and testing my own server from scratch.

The first thing I had to do was set up a VM with the newest version of the server installer. It was simple enough: after building the VM in Hyper-V from the Ubuntu 18.04 ISO, I had to download the installer. Since there's no browser on Ubuntu Server, I used curl to download the file and save it. Right before we do that, we'll install a few dependencies: unzip to unpack the installer, curl itself, and the curl and OpenSSL libraries the server needs to run.

```shell
sudo apt-get update
sudo apt-get install -y unzip curl libcurl4 libssl1.0.0
```

Now we can download the server software:

```shell
sudo curl https://minecraft.azureedge.net/bin-linux/bedrock-server-1.12.1.1.zip --output bedrock-server.zip
```

Then unpack the zip:

```shell
sudo unzip bedrock-server.zip -d bedrock-server
```

This one will give you a pretty verbose output of all the files as it unpacks. It was too long to get the whole thing into a reasonable screenshot.

Once it's unzipped, navigate into the bedrock-server directory and start the server. The server expects to find its bundled libraries in its own directory, so LD_LIBRARY_PATH is pointed at the current directory (the dockerfile later does the same thing with ENV):

```shell
cd bedrock-server
sudo env LD_LIBRARY_PATH=. ./bedrock_server
```

At this point, anything you type goes to the server console itself. Once it was up and running, I tested the server by connecting to it from a Minecraft client.

By running the list command from both the server console and the client, you can see my character listed on both.

While this works great at the VM level, I'm eventually shooting to have this run in Kubernetes, so I opted to create a docker image I could use for that. Before I can do any of that, I have to build and test the image in docker.

First thing to do is create the dockerfile. It needs to do the things I did above, but before that it refreshes the package lists with an apt-get update. With the dockerfile, we're basically just going to execute all the commands we did before, with some additional items for cleanup.

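```dockerfile
FROM ubuntu:latest

# Update and install in one layer so the package index is never stale in the cache
# (libssl1.0.0 is only packaged in older releases such as Ubuntu 18.04)
RUN apt-get update && \
    apt-get install -y unzip curl libcurl4 libssl1.0.0 && \
    rm -rf /var/lib/apt/lists/*

# Download, unpack, and delete the zip in one layer so it doesn't bloat the image
RUN curl -fsSL https://minecraft.azureedge.net/bin-linux/bedrock-server-1.12.1.1.zip --output bedrock-server.zip && \
    unzip bedrock-server.zip -d bedrock-server && \
    rm bedrock-server.zip

WORKDIR /bedrock-server
# The server loads libraries bundled in its own directory
ENV LD_LIBRARY_PATH=.
# Exec form: bedrock_server runs as PID 1 and receives docker stop's SIGTERM directly
CMD ["./bedrock_server"]
```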

Then we can go ahead and build the image to make sure everything is set up properly.

```shell
docker build . -t ubuntu-minecraft
```

Again, the output on this one is pretty long since it goes through all the trouble of updating all the packages. This will be important later for keeping things fresh during automation. Eventually the build will make it all the way through.

Now all we have to do is run a container that publishes the game's UDP port (19132) on the host, so I can connect to it from the Minecraft client on my desktop:

```shell
docker run -d -p 19132:19132/udp ubuntu-minecraft
```

The downside here is that Docker containers are inherently built to be lightweight and redeployable. By default there is no storage persistence, which translates to no world persistence: anything the server writes lives only inside the container, so if the container is ever removed and recreated, you lose all progress. To fix that, you need a persistent volume in Docker attached to the container.

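Turns out creating a persistent volume is fairly easy in Docker: `docker volume create minecraftworld` is all it takes. (Even without that step, `docker run --mount` creates a named volume the first time it's referenced.)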

Then we can recreate our minecraft server with the attached storage.

```shell
docker run -d -p 19132:19132/udp --mount source=minecraftworld,target=/bedrock-server ubuntu-minecraft
```

Then we can test with persistence by making some changes in the world, then killing the container and re-running the above command to recreate. You should find that you were right where you left off when you closed the game. Realistically, you could stop here and enjoy your containerized Minecraft server environment. However, what happens when the next minecraft update drops and you need to update your server? You'd have to update your dockerfile, rebuild your image and then kill/run your new docker container.
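One wrinkle worth knowing before you automate updates: as I understand Docker's named-volume behavior, an empty volume is pre-populated from the image the first time it's mounted, but from then on the volume's copy of /bedrock-server (old server binaries included) hides whatever a rebuilt image contains at that path. A minimal sketch of one workaround, assuming you only need the world itself to persist, is to mount the volume at /bedrock-server/worlds instead. The `recreate_server` function and `DRY_RUN` switch are hypothetical helpers; with this layout, server.properties and the other config files come from the image rather than the volume. If you're switching over from the full-directory mount, start from a fresh volume (or copy the worlds folder across) instead of reusing the old one.

```shell
#!/bin/sh
# Sketch: recreate the Bedrock container while keeping only the world on a named volume.
# Assumption: mounting /bedrock-server/worlds (not all of /bedrock-server) so a rebuilt
# image's new server binaries aren't hidden by an older copy stored in the volume.

# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: replace container $1 with a fresh one from image $3,
# reattaching the world volume $2.
recreate_server() {
  local name="$1" volume="$2" image="$3"
  run docker rm -f "$name" || true   # fine if the container doesn't exist yet
  run docker run -d --name "$name" -p 19132:19132/udp \
    --mount "source=${volume},target=/bedrock-server/worlds" "$image"
}
```

For example, after rebuilding the image you'd run `recreate_server minecraft minecraftworld ubuntu-minecraft`; with `DRY_RUN=1` set, it just prints the two docker commands.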

While not a huge inconvenience, I'd rather not have to babysit the download page to see if there's a new version of the server software. That's where build automation and repositories come in. So now we know our latest build is fully functional and is ready to be pushed to our repo. If you don't already have a repository for your images, or a docker hub account, you can find the instructions for both in my Intro to Kubernetes - What is Docker? article.

So I'm going to show you how I automated the build process to automatically build a new image and push it to the repo every time the Minecraft website updates with a new version of the Minecraft Bedrock server software.

The first thing I had to do was write a script to get the download link from the Minecraft server download page. At first I tried to do this with the Internet Explorer COM object in PowerShell. That turned out to be way too convoluted, and it wasn't going to work the way I'd hoped, because there was no way to get the link element given how it was tagged in the HTML. I realized that the curl command (an alias for Invoke-WebRequest in Windows PowerShell) brought in the same info, and its result has a Links property I could search to get the right URL. This shortened my script by a lot. I needed the specific Bedrock version, which was hard to find without Google's help, but we got there. Here's a PowerShell one-liner that grabs the URL that triggers the download of the zip file.

```powershell
$url = ((Invoke-WebRequest -Uri "https://www.minecraft.net/en-us/download/server/bedrock/" -UseBasicParsing).Links | Where-Object { $_.href -like "*azureedge.net/bin-linux/*" }).href
```
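For anyone who'd rather not depend on PowerShell, the same scrape can be roughly sketched in plain shell with curl and grep. This assumes the page still contains the Linux zip as a quoted link containing azureedge.net/bin-linux/, which is an assumption about the page's markup, not something the site guarantees. Requiring the slash right after bin-linux keeps it from matching a bin-linux-preview path if one shows up, and the browser-like User-Agent is there because minecraft.net may refuse requests that don't look like they come from a browser. Both function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read HTML on stdin and print the first Linux
# (non-preview) Bedrock server zip URL, or nothing if none is found.
extract_bedrock_url() {
  grep -oE 'https://[^"]*azureedge\.net/bin-linux/[^"]*\.zip' | sed -n '1p'
}

# Hypothetical helper: fetch the download page (needs network access)
# and extract the URL from it.
fetch_bedrock_url() {
  curl -fsSL -A "Mozilla/5.0" \
    "https://www.minecraft.net/en-us/download/server/bedrock/" |
    extract_bedrock_url
}
```

Splitting the parsing from the download keeps the part most likely to break (the pattern) testable against a saved copy of the page.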

Now comes the automation. It took a lot of trial and error, but here's what I settled on. I built a release pipeline in Azure DevOps that runs the script above on a schedule. It stores the current URL in an Azure key vault as a secret, so each run can compare the URL it finds against the stored one. If the URL has changed, the pipeline updates the secret, then triggers the build of the image and pushes it to my repo. Here's the breakdown:

In my new MinecraftUbuntu project in Azure DevOps, I created a new Release Pipeline.

Like I said, eventually this will be my project to deploy this image into AKS as a highly-available service, so that's why it's named as such.

In the pipeline, I created a stage to build/push my image.

This stage really does 2 main things: Check the download URL for new versions, and build/push my new image to the repository. But in reality, this is 5 different jobs on 2 different agents.

The first job pulls in the key vault holding my current download URL and imports its secrets. I have a key vault in my Azure subscription called "ubuntuurl", and within that key vault a secret called "currentminecraftubuntudownloadurl". When the first job runs, it imports that secret's name and value.

In order to make this work, I had to grant access to the service principal that performs the lookup on behalf of the pipeline.

You can see that it has the name of my Azure DevOps project, so it should be fairly easy to search for when adding the access policy.
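For reference, if your vault uses access policies, the same grant can be sketched with the Azure CLI. The Key Vault task only needs to read the secret (Get and List), but the version-check script writes it back with Set-AzKeyVaultSecret, so the policy also needs Set. The app ID is a placeholder for the service principal behind your Azure DevOps service connection, and `grant_pipeline_secret_access` is a hypothetical wrapper with a `DRY_RUN` switch for previewing the command. If your vault uses Azure RBAC instead of access policies, you'd assign a role rather than a policy.

```shell
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical wrapper: $1 = key vault name, $2 = app (client) ID of the
# service principal behind the Azure DevOps service connection (placeholder).
grant_pipeline_secret_access() {
  local vault="$1" sp_app_id="$2"
  # get/list for the Key Vault task, set for Set-AzKeyVaultSecret in the script
  run az keyvault set-policy --name "$vault" --spn "$sp_app_id" \
    --secret-permissions get list set
}
```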

The second job runs a script utilizing the command above to get the url from the minecraft site:

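```powershell
# Azure DevOps replaces $(currentminecraftubuntudownloadurl) with the secret imported by the Key Vault task
$oldurl = "$(currentminecraftubuntudownloadurl)"
Write-Host "Imported $oldurl as url from secret"

$doc = Invoke-WebRequest -Uri "https://www.minecraft.net/en-us/download/server/bedrock/" -UseBasicParsing
$downloadlink = ($doc.Links | Where-Object { $_.href -like "*azureedge.net/bin-linux/*" }).href
if (-not $downloadlink) { throw "No bin-linux download link found on the page" }
Write-Host "Found $downloadlink from minecraft website"

# Exact comparison: -match would treat the old URL as a regex (and an empty one matches everything)
if ($downloadlink -eq $oldurl) {
    Write-Output "URL is not updated since the last check"
}
else {
    Write-Host "New Download URL found"
    # Expose the URL to the next task in this job as $(installerurl)
    Write-Output ('##vso[task.setvariable variable=installerurl]{0}' -f $downloadlink)
    # Store the new URL so the next run compares against it
    $secureurl = $downloadlink | ConvertTo-SecureString -AsPlainText -Force
    Set-AzKeyVaultSecret -VaultName ubuntuurl -Name currentminecraftubuntudownloadurl -SecretValue $secureurl
    Write-Host "Updated currentminecraftubuntudownloadurl in key vault"
}
```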

Nothing too difficult. It does a quick check to see if the URL has changed, and if it hasn't, the entire job stops dead. Note the output of the installerurl variable: it's needed in the next task, where I set the release pipeline variable to that URL so that it can be handed off to the next agent for its jobs.
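The decision the script makes is small enough to sketch on its own. This minimal shell version, built around a hypothetical `url_changed` function, compares the two URLs as plain strings (so the dots in the version number aren't interpreted as regex wildcards the way a -match comparison would treat them, and an empty stored value can't match everything) and treats an empty scrape result as "no change" so a failed page fetch never overwrites the stored URL.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit 0) only when a new, non-empty URL
# differs from the stored one. Plain string comparison, no regex.
url_changed() {
  local old="$1" new="$2"
  # An empty scrape result means the fetch or parse failed: treat as "no change"
  [ -n "$new" ] || return 1
  [ "$new" != "$old" ]
}

# Example use in a scheduled job (STORED_URL and LATEST_URL are placeholders):
# if url_changed "$STORED_URL" "$LATEST_URL"; then
#   echo "New Bedrock server build: $LATEST_URL"
# fi
```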

The next job is important because it writes the URL used to download the Minecraft server software into a pipeline variable. I got the info on how to update a pipeline variable from this article: https://stefanstranger.github.io/2019/06/26/PassingVariablesfromStagetoStage/

Pay close attention to the security settings portion that allows permissions to Manage Releases.

Here's the job as it sits in my pipeline:

```powershell
#region variables
# Must match a variable already defined in the release pipeline's Variables tab
$ReleaseVariableName = 'installerdownloadurl'
$releaseurl = ('{0}{1}/_apis/release/releases/{2}?api-version=5.0' -f $($env:SYSTEM_TEAMFOUNDATIONSERVERURI), $($env:SYSTEM_TEAMPROJECTID), $($env:RELEASE_RELEASEID) )
# $env:SYSTEM_ACCESSTOKEN is only populated when the agent job has
# "Allow scripts to access the OAuth token" enabled
$headers = @{ Authorization = "Bearer $env:SYSTEM_ACCESSTOKEN" }
#endregion

#region Get current Release
Write-Host "URL: $releaseurl"
$Release = Invoke-RestMethod -Uri $releaseurl -Headers $headers
#endregion

#region Output current Release Pipeline
Write-Output ('Release Pipeline variables output: {0}' -f $($Release.variables | ConvertTo-Json -Depth 10))
#endregion

#region Update StageVar with new value
# Azure DevOps substitutes $(installerurl) with the value set by the previous task
$Release.variables.($ReleaseVariableName).value = "$(installerurl)"
#endregion

#region update release pipeline
Write-Output ('Updating Release')
$json = @($Release) | ConvertTo-Json -Depth 99
Invoke-RestMethod -Uri $releaseurl -Method Put -Body $json -ContentType "application/json" -Headers $headers
#endregion

#region Get updated Release
Write-Output ('Get updated Release')
Write-Host "URL: $releaseurl"
$Release = Invoke-RestMethod -Uri $releaseurl -Headers $headers
#endregion

#region Output Updated Release Pipeline
Write-Output ('Updated Release Pipeline variables output: {0}' -f $($Release.variables | ConvertTo-Json -Depth 10))
#endregion
```

The parts that are important are:

- The $ReleaseVariableName at the top. This is the variable you ultimately want to pass into the next agent, and it's how you'll reference it there.

- The region to Update StageVar with new value; specifically, you can see I'm calling the installerurl variable and feeding its value into the release pipeline variable.

The rest is mostly REST API calls against the release to make the changes.

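When those two jobs run, they write the download URL into the pipeline variables to be handed off to the next agent.

This is where things get tricky. The next couple of jobs perform the build and push tasks, and I needed a few different things set up to get them working: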

- A service connection to my docker hub

- An existing docker repo

- A modified dockerfile that accepts arguments

First you'll need a repo to push the image to. If you've been following along on this article, there's a link to one of my other articles with instructions for creating a Docker account as well as a repository.

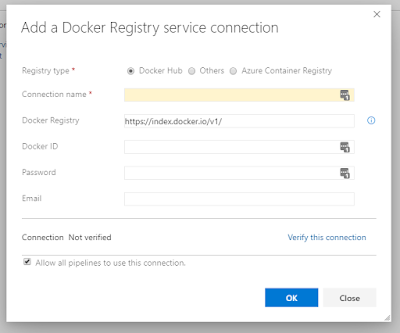

Then we'll continue on with the service connection. In Azure DevOps, you'll need to create a new service connection by clicking on Project Settings at the very bottom left corner of the page. Then in "Service Connections" click "New service connection". In the drop-down, look for Docker Registry and click on that. You'll want to make sure that the Registry type is set to Docker Hub (if you're using docker as your repo).

Easy enough to fill in the rest of the info there with your information.

Then the modified dockerfile. Our plan is to feed the URL variable we grabbed earlier into our dockerfile build, so that the build programmatically picks up the current URL each time it runs. So we'll take the dockerfile we had earlier and make a couple of small changes to it:

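```dockerfile
FROM ubuntu:latest

# Supplied at build time, e.g. docker build --build-arg download_url=<zip url> .
ARG download_url

# Update and install in one layer so the package index is never stale in the cache
RUN apt-get update && \
    apt-get install -y unzip curl libcurl4 libssl1.0.0 && \
    rm -rf /var/lib/apt/lists/*

# -f makes curl fail the build on an HTTP error instead of saving an error page
RUN curl -fsSL "${download_url}" --output bedrock-server.zip && \
    unzip bedrock-server.zip -d bedrock-server && \
    rm bedrock-server.zip

WORKDIR /bedrock-server
ENV LD_LIBRARY_PATH=.
CMD ["./bedrock_server"]
```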

The most important change here is the addition of the ARG download_url line, and the change to the curl command to substitute in the value of that argument. Here's where I need to spend a moment introducing the concept of a pipeline artifact in Azure DevOps. The simplest way to explain an artifact is to say that it's an input object. Almost anything can be an artifact: a dockerfile, a YAML file, an ARM template, etc. We'll have to connect these artifacts to our pipeline so that our jobs can reference them.

In my MinecraftUbuntu project, I have a git repo that holds my dockerfile. You can see that I can edit the file in-line, which is pretty cool.

If I then go back to my pipeline and click "Add" under Artifacts, I can add my Azure repo:

And now my artifact is linked.

Then, returning to my build stage, I've added a build task and a push task. Let's take a look at those.

In my build task, I've specified that my dockerfile is the one we left in our repo. The Container registry field points to the service connection we created previously, and the Container repository field has the repo path for our images in our Docker Hub account. For tags, I've left in the default build number and added "latest" so that it automatically updates the default image when you pull from the repo without a tag. In the Arguments field, I've passed the installerdownloadurl variable into the build arguments:

Running this task on its own would likely break your image, since the variable for the URL only gets set while the release runs. It's not a persistent variable, and it would not get passed in if you ran this task solo.

The last task, "Push", is as simple as it sounds. It executes a docker push command to your repo with the tags in the job:

Again, it uses the docker service connection and the docker repo, then appends any tags in the tags field.
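To sanity-check the build argument outside the pipeline, the Build and Push tasks can be approximated locally. This is a sketch, not what the Docker tasks literally execute: `build_and_push` and `bedrock_version` are hypothetical helpers, the repository name is a placeholder for your Docker Hub repo, and it tags with the Bedrock version parsed from the zip file name instead of the build number the pipeline uses. The URL reaches the dockerfile's `download_url` ARG through `--build-arg`.

```shell
#!/bin/sh
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: .../bin-linux/bedrock-server-1.12.1.1.zip -> 1.12.1.1
bedrock_version() {
  local file="${1##*/}"   # bedrock-server-1.12.1.1.zip
  file="${file%.zip}"     # bedrock-server-1.12.1.1
  echo "${file#bedrock-server-}"
}

# Hypothetical local stand-in for the pipeline's Build and Push tasks.
# $1 = download URL, $2 = Docker Hub repository (placeholder, e.g. youruser/ubuntu-minecraft).
# Run from the folder containing the dockerfile.
build_and_push() {
  local url="$1" repo="$2" version
  version="$(bedrock_version "$url")"
  # Feed the URL into the dockerfile's ARG download_url
  run docker build --build-arg "download_url=${url}" \
    -t "${repo}:${version}" -t "${repo}:latest" . || return 1
  run docker push "${repo}:${version}" || return 1
  run docker push "${repo}:latest"
}
```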

Now that we have our pipeline configured, we just need to configure the scheduling so that this runs on its own every weekday.

On your pipeline, your stages have a little lightning bolt icon that will allow you to configure the properties of the stage:

Clicking on that icon will open up the conditions that have to be met for the stage to run.

Here you can see that I've enabled the Schedule option so that the stage is allowed to trigger at 6:00 AM M-F. I figure I don't want to trigger updates during peak hours, so this is what I chose. Notice I said the stage is allowed to trigger: this setting only configures the conditions that must be met for the stage to fire. Next we'll configure the actual trigger schedule. Under Artifacts, you'll see a button that says "Schedule not set" with a little clock icon:

Clicking the clock icon will open the scheduling pane so that we can configure the options like we did previously with the stage conditions:

And with that, we can set it and forget it. Now every time the URL changes on the Minecraft download page, our image will get rebuilt and pushed to the repo. The next steps in my project are to build out the AKS cluster in Azure and develop a custom Helm chart to deploy this image with some external load balancers in front of it, plus persistent storage. Once I get all that figured out, I'll be back with another article on how to build it. Until next time!
